
They translate, summarize, write, code, debate, and teach. Invisible yet omnipresent, LLMs (Large Language Models) are now shaping our relationship with language and artificial intelligence. What are they? How do they work? What are their uses, what are the risks, and what is the future? An investigation into the digital brains that speak to us.

What is an LLM?

Behind this somewhat cold acronym (LLM, for Large Language Model) lies one of the most spectacular advances in contemporary artificial intelligence. These "large language models" are algorithms capable of understanding, generating, and manipulating human language with a realism that defies intuition.

An LLM is not a conscious robot, nor is it an entity with intelligence in the biological sense. It is a statistical model trained on huge volumes of text to predict, at each step, the most likely next word (more precisely, the next token: a word or a fragment of a word) given the words that precede it. This seemingly simple task of predicting the next word is now enough to produce stunning dialogue, coherent essays, computer code, poems, and even preliminary medical diagnoses.
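To make "predicting the next word" concrete, here is a deliberately tiny sketch. It assumes the simplest possible statistical model, a bigram counter that only looks at the previous word; real LLMs use neural networks over tokens and long contexts, so this is an analogy, not how they are implemented. The function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict:
    """Count, for every word, which words follow it in the corpus."""
    words = corpus.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word_probs(model: dict, word: str) -> dict:
    """Turn the follow-up counts of `word` into a probability distribution."""
    counts = model.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def predict_next(model: dict, word: str):
    """Pick the most probable next word (greedy choice), or None if unseen."""
    probs = next_word_probs(model, word)
    return max(probs, key=probs.get) if probs else None


corpus = "the cat sat on the mat . the cat ate . the dog sat on the rug ."
model = train_bigram(corpus)
print(next_word_probs(model, "the"))  # → {'cat': 0.4, 'mat': 0.2, 'dog': 0.2, 'rug': 0.2}
print(predict_next(model, "the"))     # → cat
```

An LLM does the same kind of thing at a vastly larger scale: instead of counting word pairs, it learns to estimate the next-token distribution from the entire preceding context.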

How do LLMs work?

LLMs are based on a technology that revolutionized natural language processing: the transformer, an architecture introduced in 2017 by a team of Google researchers in a seminal paper titled "Attention Is All You Need".
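The core operation named in that paper's title is scaled dot-product attention, softmax(QKᵀ/√d_k)·V: each position compares its query with every key and takes a weighted average of the values. The pure-Python sketch below shows a single attention head only; it assumes the query, key, and value vectors are already given, whereas a real transformer computes them with learned projections, runs many heads in parallel, and uses optimized matrix libraries.

```python
import math


def softmax(xs):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention for lists of vectors (one head, no projections)."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query with every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # attention weights, summing to 1
        # Weighted average of the value vectors
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# One query that resembles the first key more than the second
print(attention([[1.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]]))
```

Here the query "attends" more strongly to the first key, so the output leans toward the first value vector, which is how a word can draw more information from the words most relevant to it.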

These models are trained on billions (for the largest, trillions) of words collected from the web, books, scientific articles, forums, wikis, and more. Training consists of exposing the model to these texts and asking it to guess the next word at each position. To succeed at this task, the model learns to capture regularities, grammatical structures, cultural references, idioms, and even writing styles.
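The training setup described above can be sketched in two pieces: slicing raw text into (context, next word) examples, and scoring a prediction with cross-entropy, the standard loss for next-token prediction, which is low when the model gave high probability to the word that actually came next. This is a simplified sketch under stated assumptions: it splits on whitespace rather than using a real tokenizer, `context_size` is a hypothetical parameter, and the probabilities would in practice come from the neural network.

```python
import math


def next_word_examples(text, context_size=3):
    """Turn raw text into (context, target) pairs: given the context, guess the target."""
    words = text.split()
    return [(words[max(0, i - context_size):i], words[i]) for i in range(1, len(words))]


def cross_entropy(predicted_probs, target):
    """Loss for one example: -log of the probability assigned to the true next word."""
    p = predicted_probs.get(target, 1e-12)  # tiny floor avoids log(0) for unseen words
    return -math.log(p)


for context, target in next_word_examples("to be or not to be", context_size=2):
    print(context, "->", target)

# A confident, correct prediction costs little; a hesitant one costs more.
print(cross_entropy({"be": 0.9, "go": 0.1}, "be"))
print(cross_entropy({"be": 0.5, "go": 0.5}, "be"))
```

Training adjusts the model's parameters to lower the average of this loss over all examples, which is what pushes it to pick up grammar, idioms, and style.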

The larger a model is (measured by its number of parameters, sometimes several hundred billion), the more fluently and subtly it tends to handle language. GPT-4, Claude 2, and Gemini 1.5 are among these giants; on certain linguistic tasks, their performance already exceeds that of many human experts.
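Where do "several hundred billion parameters" come from? A common rule of thumb for a decoder-only transformer is about 12·d_model² weights per layer (roughly 4·d_model² for attention and 8·d_model² for the feed-forward block), plus the token-embedding table. The sketch below applies this approximation, which ignores biases and normalization layers, to the published GPT-3 configuration (96 layers, d_model = 12288, 50,257-token vocabulary), since the sizes of GPT-4, Claude, and Gemini have not been disclosed.

```python
def approx_transformer_params(n_layers, d_model, vocab_size):
    """Rough parameter count for a decoder-only transformer.

    Per layer: ~4*d_model^2 for the attention projections (Q, K, V, output)
    plus ~8*d_model^2 for the feed-forward block (two matrices with a 4x
    hidden size), i.e. ~12*d_model^2. Biases and layer norms are ignored.
    """
    per_layer = 12 * d_model ** 2
    embeddings = vocab_size * d_model
    return n_layers * per_layer + embeddings


total = approx_transformer_params(n_layers=96, d_model=12288, vocab_size=50257)
print(f"{total / 1e9:.1f} billion")  # → 174.6 billion
```

The estimate lands close to the 175 billion parameters reported for GPT-3, and it shows why size grows so quickly: doubling d_model roughly quadruples the weights in every layer.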

Universal Assistants: The Roles of LLMs

If we had to sum up their usefulness in one word, it would be versatility. LLMs are designed to be language generalists, and this ability opens the way to a multitude of applications.

- Automatic writing: articles, emails, reports, video scripts, speeches…

- Education: learning assistance, explanation of concepts, exam preparation.

- Translation: in real time or offline, with quality that rivals specialized tools.

- Search: extracting precise information from large bodies of data.

- Software development: code generation, debugging, documentation.

- Artistic creation: screenplays, poems, word games, music…

In some sectors, such as medicine, law or finance, these models are already integrated into workflows to assist professionals in analyzing complex documents.

The undeniable advantages of LLMs

Their strength comes from their ability to understand context, adapt to different tones and intentions, and produce text in dozens of languages. Unlike older, rigid chatbots, LLMs can hold nuanced, context-aware conversations and even invent credible stories.

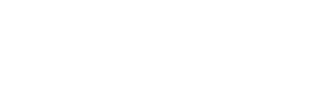

They are accessible via online interfaces (ChatGPT, Claude.ai, Gemini) or via APIs that companies can integrate into their products. They give millions of users, often without technical skills, access to powerful tools that help with thinking, writing, and organizing.
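As an illustration of API access, the sketch below builds an HTTP request for OpenAI's Chat Completions endpoint using only the Python standard library. The API key, prompt, and model name are placeholders, and other providers (Anthropic, Google) use different endpoints and message formats; in practice, most developers use the providers' official SDKs instead. The helper names `build_chat_request` and `send_chat_request` are hypothetical.

```python
import json
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt, api_key, model="gpt-4o"):
    """Prepare (but do not send) a chat request with a single user message."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_chat_request(request):
    """Send the request and return the text of the model's first reply."""
    with urllib.request.urlopen(request) as response:
        reply = json.load(response)
    return reply["choices"][0]["message"]["content"]


# Usage (requires a valid key and network access):
# req = build_chat_request("Summarize this email in two sentences: ...", api_key="YOUR_KEY")
# print(send_chat_request(req))
```

Separating request construction from sending keeps the example inspectable offline and makes it easy to swap in another provider's format.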

In education, for example, a dyslexic student can use an LLM as a reading tutor. A teacher can generate tailored lesson plans. A developer can describe in natural language what their code should do, or ask questions about it. The revolution is quiet, but it is moving forward.

Limits not to be neglected

But these models are not without flaws. Their hallucinations, that is, their tendency to produce invented but plausible answers, pose problems in critical uses (medicine, journalism, law).

LLMs can also reproduce biases present in their training data: sexism, racism, cultural stereotypes. Although efforts are made to correct them, these biases are systemic and difficult to eradicate completely.

Another sticking point is the environmental impact of their training: millions of GPU hours are required, with a worrying carbon footprint. Finally, their rapid integration raises ethical and social questions: misinformation, surveillance, and the dehumanization of certain professions.
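The link between GPU hours and carbon footprint is simple arithmetic: energy = GPU-hours × average power per GPU × PUE (the data center's overhead factor), and emissions = energy × carbon intensity of the electricity grid. The sketch below runs this calculation on purely hypothetical inputs (1 million GPU-hours, 0.4 kW per GPU, a PUE of 1.1, 0.4 kg CO2e per kWh); real figures vary widely with hardware, data center, and energy mix, and this estimate ignores hardware manufacturing and the energy used to answer queries afterwards.

```python
def training_emissions_tonnes(gpu_hours, gpu_power_kw, pue, grid_kg_co2_per_kwh):
    """Rough CO2-equivalent estimate for a training run, in tonnes.

    energy (kWh)   = GPU-hours x average power per GPU (kW) x PUE
    emissions (kg) = energy x carbon intensity of the grid (kg CO2e per kWh)
    """
    energy_kwh = gpu_hours * gpu_power_kw * pue
    return energy_kwh * grid_kg_co2_per_kwh / 1000


# Hypothetical inputs, for illustration only
print(round(training_emissions_tonnes(1_000_000, 0.4, 1.1, 0.4)))  # → 176
```

The same formula shows the main levers for reducing the footprint: more efficient hardware, better-run data centers, and low-carbon electricity.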

Overview of dominant models

Here are some of the most influential LLMs to date:

- GPT-4 and GPT-4o (OpenAI): multimodal models capable of processing text and images, and audio as well in GPT-4o's case.

- Claude 2/3 (Anthropic): focused on safety and “harmlessness”.

- Gemini (Google DeepMind): integrated into Google's tools, with strong multimodal performance.

- LLaMA 3 (Meta): openly available weights, powerful and customizable.

- Mistral (Mistral AI, France): champions of lightweight, efficient models.

- Command R (Cohere), PaLM (Google), Yi, Baichuan…: other notable alternatives.

Some models are closed (proprietary), others are open source, opening the way to more decentralized and ethical uses.

The future: towards dialogic and multimodal intelligence

LLMs are no longer just text generators. They are becoming multimodal, capable of understanding and generating images, audio, and video. OpenAI has launched GPT-4o, which can hold spoken conversations in real time with synthetic emotional expressiveness.

We are also seeing the emergence of AI agents capable of acting autonomously on the web, using tools, and interacting with software. LLMs are thus becoming operational brains, more than simple textual assistants.
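The basic mechanics of such an agent can be sketched as a loop: the model decides on an action, the program runs the requested tool, and the result is fed back until the model gives a final answer. Everything below is hypothetical and simplified: `scripted_model` stands in for a real LLM, the single `calculator` tool stands in for web search or software control, and real systems use each provider's own tool-calling format.

```python
from typing import Callable

# Hypothetical tool registry; a real agent might expose web search, code execution, etc.
TOOLS = {
    "calculator": lambda expr: str(sum(int(x) for x in expr.split("+"))),
}


def run_agent(model: Callable, task: str, max_steps: int = 5) -> str:
    """Ask the model what to do, run the requested tool, feed the result back."""
    history = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        action = model(history)
        if action["type"] == "final":
            return action["content"]
        result = TOOLS[action["tool"]](action["input"])
        history.append({"role": "tool", "content": result})
    return "stopped: step limit reached"  # safety net against endless loops


def scripted_model(history):
    """Stand-in for an LLM: first requests the calculator, then answers with its result."""
    if history[-1]["role"] == "user":
        return {"type": "tool", "tool": "calculator", "input": "19+23"}
    return {"type": "final", "content": f"The answer is {history[-1]['content']}."}


print(run_agent(scripted_model, "What is 19 + 23?"))  # → The answer is 42.
```

The step limit matters in practice: an autonomous loop needs an explicit stopping rule so that a confused model cannot keep calling tools indefinitely.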

In the medium term, the future is shaped by three axes: personalization, energy efficiency, and interpretability. The ability to adapt an LLM to a specific context or person without compromising safety may well define the next decade.

Conclusion

LLMs are not a passing fad. They are profoundly redefining our relationship with language, knowledge, and creativity. They also pose dizzying challenges: ethical, ecological, and educational. Like any major technology, they are ambivalent: neither good nor bad in essence, but powerful, and therefore demanding in terms of governance.

Understanding how they work, their limitations, and their promises is now a basic skill in a world where the lines between human and machine are becoming more porous than ever.
